playwright: proxy-related


Introduction to using langflow

Calling your own function

https://github.com/langflow-ai/langflow/discussions/1272

Errors

Fernet key must be 32 url-safe base64-encoded bytes.

https://github.com/langflow-ai/langflow/discussions/1521

Generate a key as described in that discussion, then update the environment variable:

- name: "LANGFLOW_SECRET_KEY"
  value: "xxxxx="

Then redeploy.
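
A Fernet key is 32 random bytes encoded as URL-safe base64, which is why the value ends in "=". As a minimal sketch that uses only the standard library, a suitable value could be generated like this. The helper name generate_fernet_key is made up for illustration and is not part of langflow.

```python
import base64
import os


def generate_fernet_key() -> str:
    """Return 32 random bytes encoded as URL-safe base64 (44 characters)."""
    return base64.urlsafe_b64encode(os.urandom(32)).decode("ascii")


if __name__ == "__main__":
    # Paste the printed value into LANGFLOW_SECRET_KEY.
    print(generate_fernet_key())
```

Keep the generated value stable across deployments; presumably anything encrypted with the old key cannot be read after the key changes.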

When using JSON format

'messages' must contain the word 'json' in some form, to use 'response_format' of type 'json_object'.

The prompt must include the word "json", as the system message does here:

import os

from openai import AzureOpenAI

client = AzureOpenAI(
    azure_endpoint=os.getenv("AZURE_OPENAI_ENDPOINT"),
    api_key=os.getenv("AZURE_OPENAI_API_KEY"),
    api_version="2024-03-01-preview",
)

response = client.chat.completions.create(
    # The model must match the deployment name chosen for the 0125-Preview model deployment.
    model="gpt-4-0125-Preview",
    response_format={"type": "json_object"},
    messages=[
        # The word "JSON" in the system prompt satisfies the requirement.
        {"role": "system", "content": "You are a helpful assistant designed to output JSON."},
        {"role": "user", "content": "Who won the world series in 2020?"},
    ],
)

print(response.choices[0].message.content)

Error building Component OpenAI:\n\nExpected mapping type as input to ChatPromptTemplate. Received <class 'list'>

This error means that, while building the OpenAI component, ChatPromptTemplate expected a mapping (such as a dict) as its input but received a list instead.

To fix it, check the value that is passed to ChatPromptTemplate as input and make sure it is a dict that maps template variable names to values, not a list:

- Build the input as a dict, for example:

prompt_input = {
    "key1": "value1",
    "key2": "value2",
}

- Pass that dict, rather than a list, as the input when the template is invoked (chat_prompt_template here is an existing ChatPromptTemplate instance):

chat_prompt_template.invoke(prompt_input)

Error building Component OpenAI:\n\ndictionary update sequence element #0 has length 1; 2 is required
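
The message itself is the standard Python ValueError raised when dict() or dict.update() is given a sequence whose elements are not key/value pairs. The sketch below only reproduces that message in plain Python. Which langflow field triggers it is not established here, and passing a bare string where key/value pairs are expected is just one assumed cause. The helper to_mapping is hypothetical.

```python
def to_mapping(items):
    """Build a dict from an iterable of (key, value) pairs."""
    return dict(items)


# Works: every element is a 2-item pair.
print(to_mapping([("temperature", 0.2)]))

# Fails: the element "a" has length 1, so it cannot be split into key and value.
try:
    to_mapping(["a"])
except ValueError as exc:
    print(exc)
```

If a component field expects a dict, checking that the value really is a dict (and not a string or a list of strings) is a reasonable first step.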

Requesting a free certificate with Let's Encrypt

Installation

brew install certbot

Installing the Prometheus exporter for Django

https://github.com/korfuri/django-prometheus

Add the URL path

path('prometheus/', include('django_prometheus.urls')),

View the metrics

http://0.0.0.0:8101/prometheus/metrics

On second thought, installing an exporter on nginx is enough, so I abandoned this approach.

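How OpenID works, and integrating it with nginx

OpenID is an open standard for single sign-on (SSO). It lets a user log in to other websites with an identity established at one provider, without entering credentials again on each site. It works as follows:

- Registration: the user registers an OpenID account on a site that supports OpenID. The OpenID identifier is usually a URL.

- Identity provider: the OpenID provider is the site responsible for verifying the user's identity. When the user wants to log in to another site, that site redirects the user to the OpenID provider they registered with.

- Authentication flow: the user enters credentials on the provider's site and the provider verifies them. On success, the provider generates a token and redirects the user back to the original site, passing the token along.

- Token verification: the original site asks the OpenID provider whether the token is valid. If it is, the user is treated as logged in to the original site.

In this way one identity can be reused across multiple sites, which is what makes single sign-on possible. The flow relies on standard authentication protocols and cryptography to protect identity information in transit and during verification.

Note that OpenID has in many cases been superseded by OAuth and OpenID Connect, which offer more features and better security.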
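
The four steps above can be sketched as a small in-process simulation. This is a deliberately simplified toy, not the real OpenID wire protocol: there are no HTTP redirects, the token is just an HMAC over the identifier, and the class names, the account URL, and the password are all made up for illustration.

```python
import hashlib
import hmac
import secrets


class Provider:
    """Toy identity provider: checks credentials and issues signed tokens."""

    def __init__(self):
        self._secret = secrets.token_bytes(32)
        # Hypothetical registered account: OpenID URL -> password.
        self._users = {"https://alice.example/": "correct-password"}

    def _sign(self, openid_url):
        return hmac.new(self._secret, openid_url.encode(), hashlib.sha256).hexdigest()

    def authenticate(self, openid_url, password):
        """Return a token for valid credentials, or None."""
        expected = self._users.get(openid_url)
        if expected is None or not hmac.compare_digest(expected.encode(), password.encode()):
            return None
        return f"{openid_url}|{self._sign(openid_url)}"

    def verify(self, token):
        """Return the OpenID URL if this provider issued the token, else None."""
        openid_url, _, signature = token.rpartition("|")
        valid = hmac.compare_digest(self._sign(openid_url).encode(), signature.encode())
        return openid_url if valid else None


class RelyingSite:
    """Toy website that delegates login to the provider."""

    def __init__(self, provider):
        self.provider = provider

    def login(self, token):
        """Accept the user only if the provider confirms the token."""
        return self.provider.verify(token)


provider = Provider()
site = RelyingSite(provider)
token = provider.authenticate("https://alice.example/", "correct-password")
print(site.login(token))
```

The point of the sketch is the division of labor: the site never sees the password, it only receives a token and asks the provider to confirm it.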

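Installing and deploying Qdrant

Qdrant is an open-source vector database built for storing and searching high-dimensional vectors. It handles large collections of vectors efficiently, for example embeddings of images, text, or audio. Its main features:

- High-dimensional vector storage: Qdrant focuses on storing and retrieving high-dimensional vectors and copes well with vectors of hundreds or even thousands of dimensions.

- Similarity search: Qdrant searches by vector similarity and quickly finds the vectors closest to a query vector.

- Indexing: Qdrant indexes vectors with HNSW (Hierarchical Navigable Small World) to speed up retrieval. FAISS (Facebook AI Similarity Search) is a separate library, not an index type inside Qdrant.

- Distributed deployment: Qdrant can run in a distributed setup, with sharding and replication of data to improve scalability and fault tolerance.

- RESTful API: Qdrant exposes a RESTful API for interacting with and managing the database.

- Flexible configuration: Qdrant offers many configuration options that can be tuned to your needs.

- Open source and extensible: Qdrant is an open-source project that can be customized and extended.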

Installation and usage

https://qdrant.tech/documentation/guides/installation/

docker run -it -d \
  --name qdrant \
  -p 6333:6333 \
  -p 6334:6334 \
  -v "$(pwd)/qdrant_data:/qdrant/storage" \
  qdrant/qdrant

Open the dashboard

http://127.0.0.1:6333/dashboard

Features

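The following simple Python example shows how to load documents into Qdrant and then run a similarity search and a range search through the REST API:

import requests

# Qdrant REST API address
QDRANT_API_URL = 'http://localhost:6333'
# Collection name used in this example (an arbitrary choice)
COLLECTION = 'documents'


# Load the documents
def load_documents(documents):
    size = len(documents[0]['vector'])
    # Create the collection; the response is ignored, so an "already exists" error does not stop the script.
    requests.put(f'{QDRANT_API_URL}/collections/{COLLECTION}',
                 json={'vectors': {'size': size, 'distance': 'Cosine'}})
    # Every field other than id and vector is stored as payload.
    points = [{'id': d['id'], 'vector': d['vector'],
               'payload': {k: v for k, v in d.items() if k not in ('id', 'vector')}}
              for d in documents]
    response = requests.put(f'{QDRANT_API_URL}/collections/{COLLECTION}/points',
                            params={'wait': 'true'}, json={'points': points})
    return response.json()


# Similarity search
def search_document(query_vector, top_k=5):
    response = requests.post(f'{QDRANT_API_URL}/collections/{COLLECTION}/points/search',
                             json={'vector': query_vector, 'limit': top_k, 'with_payload': True})
    return response.json()


# Range search: a payload filter on the numeric field 'rating'
def range_search_document(min_range, max_range, top_k=5):
    condition = {'key': 'rating', 'range': {'gte': min_range, 'lte': max_range}}
    response = requests.post(f'{QDRANT_API_URL}/collections/{COLLECTION}/points/scroll',
                             json={'filter': {'must': [condition]}, 'limit': top_k, 'with_payload': True})
    return response.json()


# Sample documents. Point ids must be unsigned integers or UUIDs.
# 'rating' is a made-up numeric field, added only so the range filter has something to match.
documents = [
    {'id': 1, 'vector': [0.1, 0.2, 0.3], 'text': 'Document 1', 'rating': 0.3},
    {'id': 2, 'vector': [0.4, 0.5, 0.6], 'text': 'Document 2', 'rating': 0.5},
    {'id': 3, 'vector': [0.7, 0.8, 0.9], 'text': 'Document 3', 'rating': 0.9},
]

# Load the documents
load_documents(documents)

# Similarity search
query_vector = [0.2, 0.3, 0.4]
result = search_document(query_vector)
print(result)

# Range search
min_range = 0.4
max_range = 0.6
result = range_search_document(min_range, max_range)
print(result)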

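In this example we first define the Qdrant API address and write three functions: load_documents loads the document data, search_document runs a similarity search, and range_search_document runs a range search. We then define some sample documents, load them, look up the documents most similar to a query vector, run a range search, and print the results.

Qdrant is a vector search engine, and its search is based on vector similarity. The query vector (query_vector) is therefore a numeric vector that represents the features of the document or query being searched. To search by text, first convert the text into a vector with a text embedding model (such as Word2Vec or BERT), then pass that vector to Qdrant for the similarity search.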
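
To illustrate the text-to-vector step without pulling in a real model, here is a toy sketch. The hashed bag-of-words function below is only a stand-in for an embedding model such as Word2Vec or BERT, and the function names, the dimension of 64, and the sample sentences are assumptions made for this example. The resulting vectors are what would be sent to Qdrant as query_vector.

```python
import hashlib
import math


def embed(text, dim=64):
    """Toy embedding: hash each word into one of `dim` buckets and count it."""
    vector = [0.0] * dim
    for word in text.lower().split():
        bucket = int(hashlib.sha256(word.encode("utf-8")).hexdigest(), 16) % dim
        vector[bucket] += 1.0
    return vector


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


documents = [
    "qdrant stores vectors",
    "nginx serves web pages",
    "vectors enable similarity search",
]
query = "search vectors in qdrant"

# Rank the documents by similarity to the query, most similar first.
query_vector = embed(query)
ranked = sorted(documents, key=lambda d: cosine_similarity(query_vector, embed(d)), reverse=True)
for doc in ranked:
    print(round(cosine_similarity(query_vector, embed(doc)), 3), doc)
```

A real embedding model captures meaning rather than shared words, but the workflow is the same: embed the text once, store the vector, and embed each query the same way before searching.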

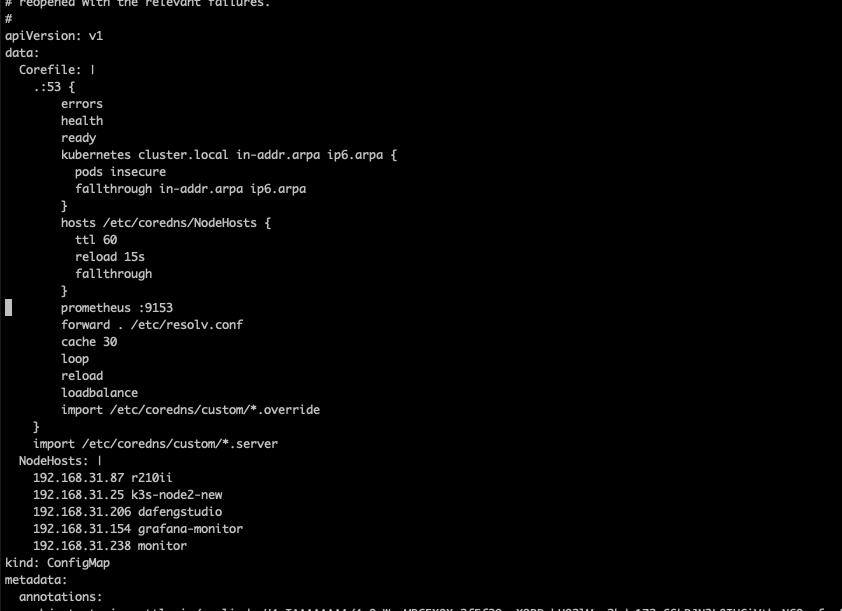

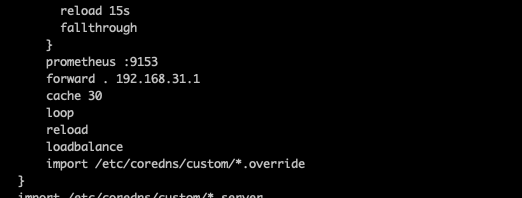

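k8s CoreDNS: supporting external DNS resolution

Edit the CoreDNS configuration

Change the forward setting so that it points to the external DNS server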
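
As a rough sketch of what that change amounts to, the snippet below rewrites the forward line of a Corefile held in a string. The Corefile shown is a typical default and only an assumed starting point, the upstream addresses 8.8.8.8 and 1.1.1.1 are placeholders, and the helper set_forward is hypothetical. It handles only the simple one-line form of forward, not a forward block with options.

```python
import re

# A typical default Corefile, used here as an assumed starting point.
COREFILE = """\
.:53 {
    errors
    health
    kubernetes cluster.local in-addr.arpa ip6.arpa {
        pods insecure
        fallthrough in-addr.arpa ip6.arpa
    }
    forward . /etc/resolv.conf
    cache 30
}
"""


def set_forward(corefile, upstreams):
    """Replace the upstream list on every one-line `forward .` directive."""
    targets = " ".join(upstreams)
    pattern = r"^([ \t]*forward[ \t]+\.[ \t]+).*$"
    return re.sub(pattern, lambda m: m.group(1) + targets, corefile, flags=re.MULTILINE)


print(set_forward(COREFILE, ["8.8.8.8", "1.1.1.1"]))
```

In a cluster the same edit would be made by hand in the CoreDNS configuration, after which the CoreDNS pods need to pick up the new configuration.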

Troubleshooting Java application performance with Arthas

Download: https://github.com/alibaba/arthas/releases

Usage

NAME          DESCRIPTION
help          Display Arthas Help
auth          Authenticates the current session
keymap        Display all the available keymap for the specified connection.
sc            Search all the classes loaded by JVM
sm            Search the method of classes loaded by JVM
classloader   Show classloader info
jad           Decompile class
getstatic     Show the static field of a class
monitor       Monitor method execution statistics, e.g. total/success/failure count, average rt, fail rate, etc.
stack         Display the stack trace for the specified class and method
thread        Display thread info, thread stack
trace         Trace the execution time of specified method invocation.
watch         Display the input/output parameter, return object, and thrown exception of specified method invocation
tt            Time Tunnel
jvm           Display the target JVM information
memory        Display jvm memory info.
perfcounter   Display the perf counter information.
ognl          Execute ognl expression.
mc            Memory compiler, compiles java files into bytecode and class files in memory.
redefine      Redefine classes. @see Instrumentation#redefineClasses(ClassDefinition...)
retransform   Retransform classes. @see Instrumentation#retransformClasses(Class...)
dashboard     Overview of target jvm's thread, memory, gc, vm, tomcat info.
dump          Dump class byte array from JVM
heapdump      Heap dump
options       View and change various Arthas options
cls           Clear the screen
reset         Reset all the enhanced classes
version       Display Arthas version
session       Display current session information
sysprop       Display and change the system properties.
sysenv        Display the system env.
vmoption      Display, and update the vm diagnostic options.
logger        Print logger info, and update the logger level
history       Display command history
cat           Concatenate and print files
base64        Encode and decode using Base64 representation
echo          write arguments to the standard output
pwd           Return working directory name
mbean         Display the mbean information
grep          grep command for pipes.
tee           tee command for pipes.
profiler      Async Profiler. https://github.com/jvm-profiling-tools/async-profiler
vmtool        jvm tool
stop          Stop/Shutdown Arthas server and exit the console.
jfr           Java Flight Recorder Command

trace some.class somemethod

Pitfalls when collecting k8s logs with promtail

Create the service account

apiVersion: v1
kind: ServiceAccount
metadata:
  name: promtail-serviceaccount
  namespace: monitor
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: promtail-clusterrole
  #namespace: monitor
rules:
  - apiGroups: [""]
    resources:
      - nodes
      - services
      - pods
      - namespaces
      - configmaps
      - jobs
      - cronjobs
      - persistentvolumeclaims
      - ingresses
      - deployments
      - replicationcontrollers
    verbs:
      - get
      - watch
      - list
  - apiGroups:
      - apps
    resources:
      - deployments
    verbs:
      - get
      - list
      - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: promtail-clusterrolebinding
  #namespace: monitor
subjects:
  - kind: ServiceAccount
    name: promtail-serviceaccount
    namespace: monitor
  - kind: User
    name: danny
  #- kind: ServiceAccount
  #  name: promtail-serviceaccount
  #  namespace: foldspace-apps
  #- kind: ServiceAccount
  #  name: promtail-serviceaccount
  #  namespace: default
  #- kind: ServiceAccount
  #  name: promtail-serviceaccount
  #  namespace: dapr-system
  #- kind: ServiceAccount
  #  name: promtail-serviceaccount
  #  namespace: openfunction
  #- kind: ServiceAccount
  #  name: promtail-serviceaccount
  #  namespace: kube-system
  #- kind: ServiceAccount
  #  name: promtail-serviceaccount
  #  namespace: kubernetes-dashboard
  #- kind: ServiceAccount
  #  name: promtail-serviceaccount
  #  namespace: kube-public
  #- kind: ServiceAccount
  #  name: promtail-serviceaccount
  #  namespace: ingress-apisix
roleRef:
  kind: ClusterRole
  name: promtail-clusterrole
  #name: admin
  apiGroup: rbac.authorization.k8s.io

Create the deployment

apiVersion: v1
kind: ConfigMap
metadata:
  name: promtail-configmap
  namespace: monitor
data:
  promtail.yaml: |-
    server:
      http_listen_port: 9080
      grpc_listen_port: 0
    positions:
      filename: /tmp/positions.yaml
    clients:
      - url: http://192.168.31.203:3100/loki/api/v1/push  # replace with the address of your Loki instance
    scrape_configs:
      - job_name: kubernetes-pods-app
        pipeline_stages:
          - docker: {}
        kubernetes_sd_configs:
          - role: pod
        relabel_configs:
          - action: drop
            regex: .+
            source_labels:
              - __meta_kubernetes_pod_label_name
          - source_labels:
              - __meta_kubernetes_pod_label_app
            target_label: __service__
          - source_labels:
              - __meta_kubernetes_pod_node_name
            target_label: __host__
          - action: replace
            replacement: $1
            separator: /
            source_labels:
              - __meta_kubernetes_namespace
              - __meta_kubernetes_pod_name
            target_label: job
          - action: replace
            source_labels:
              - __meta_kubernetes_namespace
            target_label: namespace
          - action: drop
            regex: ''
            source_labels:
              - __service__
          - action: labelmap
            regex: __meta_kubernetes_pod_label_(.+)
          - replacement: /var/log/pods/*$1/*.log
            separator: /
            source_labels:
              - __meta_kubernetes_pod_uid
              - __meta_kubernetes_pod_container_name
            target_label: __path__
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: promtail-log-collector
  namespace: monitor
  labels:
    app: promtail
spec:
  selector:
    matchLabels:
      app: promtail
      type: daemonset
      author: danny
  template:
    metadata:
      labels:
        app: promtail
        type: daemonset
        author: danny
    spec:
      containers:
        - name: promtail
          image: registry.dafengstudio.cn/grafana/promtail:2.9.2
          args:
            - -config.file=/etc/promtail/promtail.yaml
            - -config.expand-env=true
          env:
            - name: HOSTNAME
              valueFrom:
                fieldRef:
                  apiVersion: v1
                  fieldPath: spec.nodeName
            - name: TZ
              value: Asia/Shanghai
          ports:
            # Note: the promtail config above sets http_listen_port to 9080, not 3101.
            - containerPort: 3101
              name: http-metrics
              protocol: TCP
          securityContext:
            #readOnlyRootFilesystem: true
            runAsGroup: 0
            runAsUser: 0
          volumeMounts:
            - mountPath: /etc/promtail
              name: promtail-configmap
            - mountPath: /run/promtail
              name: run
            - mountPath: /var/lib/kubelet/pods
              name: kubelet
              readOnly: true
            - mountPath: /var/lib/docker/containers
              name: docker
              readOnly: true
            - mountPath: /var/log/pods
              name: pod-log
              readOnly: true
            - name: timezone
              mountPath: /etc/localtime
      volumes:
        - configMap:
            defaultMode: 420
            name: promtail-configmap
          name: promtail-configmap
        - name: timezone
          hostPath:
            path: /usr/share/zoneinfo/Asia/Shanghai
        - hostPath:
            path: /run/promtail
            type: ""
          name: run
        - hostPath:
            path: /var/lib/kubelet/pods
            type: ""
          name: kubelet
        - hostPath:
            path: /var/lib/docker/containers
            type: ""
          name: docker
        - hostPath:
            path: /var/log/pods
            type: ""
          name: pod-log
      serviceAccount: promtail-serviceaccount
      serviceAccountName: promtail-serviceaccount
  updateStrategy:
    type: RollingUpdate

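In the end, logs from several namespaces still could not be collected, no matter what I tried.

It turned out that a pod must carry an app label for its logs to be collected: the relabel rules above copy that label into __service__ and then drop every target whose __service__ is empty.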
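
To make the cause concrete, the two drop rules in the scrape config can be modeled in a few lines of Python. This is only a simplified sketch of Prometheus-style relabeling (regexes match the whole value, missing labels count as empty strings). The function name is_collected and the sample labels are made up for illustration.

```python
import re


def is_collected(pod_labels):
    """Return True if a pod with these Kubernetes labels survives the drop rules."""
    # Rule 1: drop any pod that has a non-empty `name` label (regex `.+`).
    if re.fullmatch(r".+", pod_labels.get("name", "")):
        return False
    # Rule 2: copy the `app` label into __service__ (empty string if missing).
    service = pod_labels.get("app", "")
    # Rule 3: drop the target when __service__ is empty (regex '').
    if re.fullmatch(r"", service):
        return False
    return True


for labels in ({"app": "web"}, {"k8s-app": "kube-dns"}, {"app": "web", "name": "web"}):
    print(labels, "collected" if is_collected(labels) else "dropped")
```

Pods in namespaces such as kube-system often use labels other than app, which would explain why whole namespaces appeared to be missing. Either add an app label to those pods or relax the drop rule on __service__.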